imdb nn-data

Loading imdb

library(keras)

MAX_FEATURES <- 10000
imdb <- dataset_imdb(num_words=MAX_FEATURES)
saveRDS(imdb, "imdb10000.rds")   # cache the downloaded data locally

dataset_imdb_word_index()

library(stringr)     # str_which()
library(data.table)  # data.table()
library(dplyr)       # arrange()

word_index <- dataset_imdb_word_index()   # e.g. fawn -> 34701
### word_index
# element name: the word; value: its frequency rank (1 = most frequent)
word_index[['good']]
word_index$'good'
word_index[[84396]]                       # 49
str_which(names(word_index), "^good$")    # 84396
word_index[84396]
# $good
# [1] 49
data.table(names(word_index), unlist(word_index)) %>% arrange(V2)
#               V1    V2
#     1:       the     1
#     2:       and     2
#     3:         a     3
#     4:        of     4
#     5:        to     5
#    ---
# 88580:    pipe's 88580
# 88581: copywrite 88581
# 88582:    artbox 88582
# 88583: voorhees' 88583
# 88584:       'l' 88584
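The real index is downloaded by `dataset_imdb_word_index()`, but its shape (a named list mapping each word to its frequency rank, 1 = most frequent) can be reproduced on a tiny corpus. This is only an illustrative sketch: the three reviews and the name `toy_word_index` are made up, and breaking ties alphabetically is an arbitrary choice here, not necessarily what was done when the IMDB index was built.

```r
# Hypothetical toy corpus; the real index comes from dataset_imdb_word_index()
reviews <- c("the film was good", "the film was bad", "a good film")

# Split into words and count how often each word occurs
words <- unlist(strsplit(reviews, " "))
counts <- table(words)

# Sort by descending frequency (ties broken alphabetically) and assign ranks 1, 2, ...
ranked <- names(counts)[order(-as.integer(counts), names(counts))]
toy_word_index <- as.list(seq_along(ranked))
names(toy_word_index) <- ranked

str(toy_word_index)
toy_word_index[["film"]]   # 1: "film" occurs most often (3 times)
toy_word_index[["bad"]]    # 6: one of the least frequent words
```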

What MAX_FEATURES (num_words) means

imdb <- dataset_imdb(num_words=1)
imdb %>% str()
# List of 2
#  $ train:List of 2
#   ..$ x:List of 25000
#   .. ..$ : int [1:218] 2 2 2 2 2 2 2 2 2 2 ...
#   .. ..$ : int [1:189] 2 2 2 2 2 2 2 2 2 2 ...
#   .. ..$ : int [1:141] 2 2 2 2 2 2 2 2 2 2 ...
#   ...
#   ..$ y: int [1:25000] 1 0 0 1 0 0 1 0 1 0 ...
#  $ test :List of 2
#   ..$ x:List of 25000
#   .. ..$ : int [1:68] 2 2 2 2 2 2 2 2 2 2 ...
#   .. ..$ : int [1:260] 2 2 2 2 2 2 2 2 2 2 ...
#   .. ..$ : int [1:603] 2 2 2 2 2 2 2 2 2 2 ...
#   ...
#   ..$ y: int [1:25000] 0 1 1 0 1 1 1 0 0 1 ...

MAX_FEATURES <- 100
imdb <- dataset_imdb(num_words=MAX_FEATURES)
imdb %>% str()
# List of 2
#  $ train:List of 2
#   ..$ x:List of 25000
#   .. ..$ : int [1:218] 1 14 22 16 43 2 2 2 2 65 ...
#   .. ..$ : int [1:189] 1 2 2 2 2 78 2 5 6 2 ...
#   .. ..$ : int [1:141] 1 14 47 8 30 31 7 4 2 2 ...
#   ...
#   ..$ y: int [1:25000] 1 0 0 1 0 0 1 0 1 0 ...
#  $ test :List of 2
#   ..$ x:List of 25000
#   .. ..$ : int [1:68] 1 2 2 14 31 6 2 10 10 2 ...
#   .. ..$ : int [1:260] 1 14 22 2 6 2 7 2 88 12 ...
#   .. ..$ : int [1:603] 1 2 2 2 2 2 2 4 87 2 ...
#   ...
#   ..$ y: int [1:25000] 0 1 1 0 1 1 1 0 0 1 ...

imdb <- dataset_imdb(num_words=10)
imdb %>% str()
# List of 2
#  $ train:List of 2
#   ..$ x:List of 25000
#   .. ..$ : int [1:218] 1 2 2 2 2 2 2 2 2 2 ...
#   .. ..$ : int [1:189] 1 2 2 2 2 2 2 5 6 2 ...
#   .. ..$ : int [1:141] 1 2 2 8 2 2 7 4 2 2 ...
#   ...
#   ..$ y: int [1:25000] 1 0 0 1 0 0 1 0 1 0 ...
#  $ test :List of 2
#   ..$ x:List of 25000
#   .. ..$ : int [1:68] 1 2 2 2 2 6 2 2 2 2 ...
#   .. ..$ : int [1:260] 1 2 2 2 6 2 7 2 2 2 ...
#   .. ..$ : int [1:603] 1 2 2 2 2 2 2 4 2 2 ...
#   ...
#   ..$ y: int [1:25000] 0 1 1 0 1 1 1 0 0 1 ...

MAX_FEATURES <- 10000
imdb <- dataset_imdb(num_words=MAX_FEATURES)
imdb %>% str()
# List of 2
#  $ train:List of 2
#   ..$ x:List of 25000
#   .. ..$ : int [1:218] 1 14 22 16 43 530 973 1622 1385 65 ...
#   .. ..$ : int [1:189] 1 194 1153 194 8255 78 228 5 6 1463 ...
#   .. ..$ : int [1:141] 1 14 47 8 30 31 7 4 249 108 ...
#   ...
#   ..$ y: int [1:25000] 1 0 0 1 0 0 1 0 1 0 ...
#  $ test :List of 2
#   ..$ x:List of 25000
#   .. ..$ : int [1:68] 1 591 202 14 31 6 717 10 10 2 ...
#   .. ..$ : int [1:260] 1 14 22 3443 6 176 7 5063 88 12 ...
#   .. ..$ : int [1:603] 1 111 748 4368 1133 2 2 4 87 1551 ...
#   ...
#   ..$ y: int [1:25000] 0 1 1 0 1 1 1 0 0 1 ...
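Judging from the outputs above, and assuming the `dataset_imdb()` defaults `start_char = 1`, `oov_char = 2` and `index_from = 3`, each word is stored as its rank + 3, and `num_words` keeps only indices smaller than `num_words`; every other index becomes 2. The hypothetical helper `mask_num_words()` below reproduces that filtering on the first ten values of the first training review, so its results can be compared with the printed `str()` lines.

```r
# Hypothetical helper mimicking the num_words filter:
# indices below num_words are kept, all others become the OOV marker (2)
mask_num_words <- function(x_seq, num_words, oov_char = 2L) {
  ifelse(x_seq < num_words, x_seq, oov_char)
}

# First ten values of imdb$train$x[[1]] with the full vocabulary
x <- c(1L, 14L, 22L, 16L, 43L, 530L, 973L, 1622L, 1385L, 65L)

mask_num_words(x, 100)   # 1 14 22 16 43 2 2 2 2 65   (same as num_words=100)
mask_num_words(x, 10)    # 1 2 2 2 2 2 2 2 2 2        (same as num_words=10)
mask_num_words(x, 1)     # 2 2 2 2 2 2 2 2 2 2        (even the start marker 1 is masked)
```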

Decoding an integer vector

> imdb <- dataset_imdb()
> imdb$train$x[[1]]
[1] 1 14 22 16 43 530 973 1622 1385 65 458 4468 66 3941 4 173 36 256 5 25 100
[22] 43 838 112 50 670 22665 9 35 480 284 5 150 4 172 112 167 21631 336 385 39 4
[43] 172 4536 1111 17 546 38 13 447 4 192 50 16 6 147 2025 19 14 22 4 1920 4613
[64] 469 4 22 71 87 12 16 43 530 38 76 15 13 1247 4 22 17 515 17 12 16
[85] 626 18 19193 5 62 386 12 8 316 8 106 5 4 2223 5244 16 480 66 3785 33 4
[106] 130 12 16 38 619 5 25 124 51 36 135 48 25 1415 33 6 22 12 215 28 77
[127] 52 5 14 407 16 82 10311 8 4 107 117 5952 15 256 4 31050 7 3766 5 723 36
[148] 71 43 530 476 26 400 317 46 7 4 12118 1029 13 104 88 4 381 15 297 98 32
[169] 2071 56 26 141 6 194 7486 18 4 226 22 21 134 476 26 480 5 144 30 5535 18
[190] 51 36 28 224 92 25 104 4 226 65 16 38 1334 88 12 16 283 5 16 4472 113
[211] 103 32 15 16 5345 19 178 32
> imdb <- dataset_imdb(num_words=50)
> imdb$train$x[[1]]
[1] 1 14 22 16 43 2 2 2 2 2 2 2 2 2 4 2 36 2 5 25 2 43 2 2 2 2 2 9 35 2 2 5 2 4 2 2 2 2 2 2 39 4 2
[44] 2 2 17 2 38 13 2 4 2 2 16 6 2 2 19 14 22 4 2 2 2 4 22 2 2 12 16 43 2 38 2 15 13 2 4 22 17 2 17 12 16 2 18
[87] 2 5 2 2 12 8 2 8 2 5 4 2 2 16 2 2 2 33 4 2 12 16 38 2 5 25 2 2 36 2 48 25 2 33 6 22 12 2 28 2 2 5 14
[130] 2 16 2 2 8 4 2 2 2 15 2 4 2 7 2 5 2 36 2 43 2 2 26 2 2 46 7 4 2 2 13 2 2 4 2 15 2 2 32 2 2 26 2
[173] 6 2 2 18 4 2 22 21 2 2 26 2 5 2 30 2 18 2 36 28 2 2 25 2 4 2 2 16 38 2 2 12 16 2 5 16 2 2 2 32 15 16 2
[216] 19 2 32

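The values 1 and 2 do not stand for words: 1 marks the start of a sequence, and 2 replaces every word whose index is not below num_words (out of vocabulary).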

### reverse_word_index ### element names are the ranks, values are the words (the inverse of word_index)

reverse_word_index <- names(word_index) # 34701 fawn

names(reverse_word_index) <- word_index

decoded_review <- sapply(imdb$train$x[[1]], function(index){

# index 0, 1, 2 => reserved indices for "padding", "start of sequence", and "unknown"

word <- if(index>=3) reverse_word_index[[as.character(index-3)]]

if(!is.null(word)) word else "?"

})

> str_c(decoded_review, collapse = " ")   # review decoded from dataset_imdb() (full vocabulary)

[1] "? this film was just brilliant casting location scenery story direction everyone's really suited the part they played and you could just imagine being there robert redford's is an amazing actor and now the same being director norman's father came from the same scottish island as myself so i loved the fact there was a real connection with this film the witty remarks throughout the film were great it was just brilliant so much that i bought the film as soon as it was released for retail and would recommend it to everyone to watch and the fly fishing was amazing really cried at the end it was so sad and you know what they say if you cry at a film it must have been good and this definitely was also congratulations to the two little boy's that played the part's of norman and paul they were just brilliant children are often left out of the praising list i think because the stars that play them all grown up are such a big profile for the whole film but these children are amazing and should be praised for what they have done don't you think the whole story was so lovely because it was true and was someone's life after all that was shared with us all"

> str_c(decoded_review, collapse = " ")   # same review decoded from dataset_imdb(num_words=50): every index 2 becomes "?"

[1] "? this film was just ? ? ? ? ? ? ? ? ? the ? they ? and you ? just ? ? ? ? ? is an ? ? and ? the ? ? ? ? ? ? from the ? ? ? as ? so i ? the ? ? was a ? ? with this film the ? ? ? the film ? ? it was just ? so ? that i ? the film as ? as it was ? for ? and ? ? it to ? to ? and the ? ? was ? ? ? at the ? it was so ? and you ? ? they ? if you ? at a film it ? have ? ? and this ? was ? ? to the ? ? ? that ? the ? of ? and ? they ? just ? ? are ? ? out of the ? ? i ? ? the ? that ? ? all ? ? are ? a ? ? for the ? film but ? ? are ? and ? be ? for ? they have ? ? you ? the ? ? was so ? ? it was ? and was ? ? ? all that was ? with ? all"
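The decoding logic can be tried without downloading anything. In this sketch `toy_word_index` is a made-up four-word index and `decode()` is a hypothetical helper. It applies the same rank + 3 offset as the code above, but checks `%in% names(...)` before indexing, because `[[` on a named character vector raises a "subscript out of bounds" error for a missing name instead of returning `NULL`.

```r
# Hypothetical toy index: word -> frequency rank
toy_word_index <- list(the = 1L, film = 2L, was = 3L, great = 4L)

# Invert it: names are the ranks, values are the words
toy_reverse <- names(toy_word_index)
names(toy_reverse) <- unlist(toy_word_index)

# Encoded review: 1 = start of sequence, 2 = out of vocabulary, word index = rank + 3
encoded <- c(1L, 4L, 5L, 6L, 2L, 7L)

decode <- function(ids, reverse_index) {
  words <- vapply(ids, function(index) {
    key <- as.character(index - 3)
    # 0, 1, 2 and any index without a matching rank decode to "?"
    if (index >= 3 && key %in% names(reverse_index)) reverse_index[[key]] else "?"
  }, character(1))
  paste(words, collapse = " ")
}

decode(encoded, toy_reverse)   # "? the film was ? great"
```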

Splitting into train / test datasets

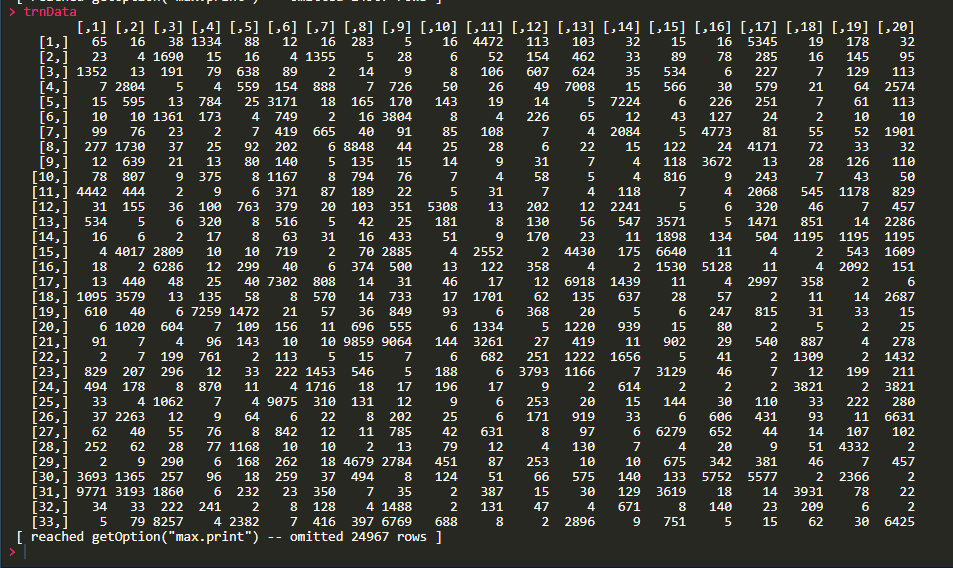

imdb <- readRDS(file.path(DATA_PATH,"imdb10000.rds"))
c(c(trnData, trnLabels), c(tstData, tstLabels)) %<-% imdb   # multiple assignment via %<-% (zeallot)
trnData %>% length()    # a list of 25,000 samples
tstData %>% length()    # a list of 25,000 samples
# [1] 25000
trnLabels %>% table()   # 0: negative, 1: positive
# .
#     0     1
# 12500 12500

Preprocessing

List -> Matrix

MAX_LEN <- 20
trnData <- pad_sequences(trnData, maxlen=MAX_LEN)   # 25000 x 20 integer matrix
tstData <- pad_sequences(tstData, maxlen=MAX_LEN)   # 25000 x 20 integer matrix
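To make the padding and truncation concrete, here is a hypothetical base-R function `pad_pre()` that mimics `pad_sequences()` with its default arguments (`padding = "pre"`, `truncating = "pre"`, `value = 0`). It is a sketch for intuition only: it assumes integer input and ignores the `dtype` argument.

```r
# Hypothetical re-implementation of pad_sequences() defaults, for illustration only
pad_pre <- function(seqs, maxlen, value = 0L) {
  t(vapply(seqs, function(s) {
    if (length(s) >= maxlen) {
      tail(s, maxlen)                          # truncate: keep the last maxlen items
    } else {
      c(rep(value, maxlen - length(s)), s)     # pad: add zeros at the front
    }
  }, integer(maxlen)))
}

seqs <- list(c(1L, 14L, 22L), 1:25)
pad_pre(seqs, maxlen = 5)
#      [,1] [,2] [,3] [,4] [,5]
# [1,]    0    0    1   14   22
# [2,]   21   22   23   24   25
```

Each list element becomes one row, so a list of 25,000 reviews turns into a 25000 x MAX_LEN matrix.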

IMDB movie-review sentiment-prediction task

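- Load the raw data.

- Keep only the 10,000 most frequent words in the reviews (the number of words treated as features).

- Truncate each review to its last 20 words (shorter reviews are zero-padded at the front). The data changes shape from a list of integer vectors to a 2D integer tensor (samples, MAX_LEN).

- The network learns an 8-dimensional embedding for each of the 10,000 words.

- The embedding layer turns the input 2D tensor into a 3D tensor (samples, MAX_LEN, 8).

- Flatten the embedded 3D tensor into a 2D tensor (samples, MAX_LEN*8).

- Train a single dense layer on top for classification.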
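The shape changes in the list above can be traced with plain matrix operations. This sketch uses random, untrained weights (`E`, `w`, `b` and `EMB_DIM` are illustrative names) and only mirrors the Embedding, Flatten, Dense (sigmoid) data flow; it is not how Keras computes or trains these layers internally.

```r
set.seed(1)
MAX_FEATURES <- 10000
MAX_LEN <- 20
EMB_DIM <- 8

# Hypothetical random weights; in Keras these are learned during fit()
E <- matrix(rnorm(MAX_FEATURES * EMB_DIM, sd = 0.05), nrow = MAX_FEATURES)  # embedding table
w <- rnorm(MAX_LEN * EMB_DIM, sd = 0.05)                                      # dense weights
b <- 0                                                                        # dense bias

# Fake padded batch of 3 reviews: 2D integer matrix (samples, MAX_LEN)
x <- matrix(sample(0:(MAX_FEATURES - 1), 3 * MAX_LEN, replace = TRUE), nrow = 3)

# Embedding lookup: each index becomes an 8-dim row of E (R is 1-based, hence + 1)
# giving a 3D array (samples, MAX_LEN, EMB_DIM)
emb <- array(0, dim = c(nrow(x), MAX_LEN, EMB_DIM))
for (i in seq_len(nrow(x))) emb[i, , ] <- E[x[i, ] + 1, ]

# Flatten each sample word by word into a vector of length MAX_LEN * EMB_DIM
flat <- t(apply(emb, 1, function(m) as.vector(t(m))))

# Single dense unit with sigmoid: probability that each review is positive
prob <- 1 / (1 + exp(-(flat %*% w + b)))

dim(emb)    # 3 20 8
dim(flat)   # 3 160
dim(prob)   # 3 1
```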

source(file.path(getwd(),"../00.global_dl.R"))

### Title: IMDB binary classification --- --- --- -- --- --- --- --- --- --- ----

# Deep Learning with R by François Chollet :: 3.4 Classifying movie reviews

# 3.4 Classifying movie reviews: a binary classification example

MAX_FEATURES <- 10000

imdb <- dataset_imdb(num_words=MAX_FEATURES)

saveRDS(imdb, "imdb10000.rds")

word_index <- dataset_imdb_word_index() # fawn 34701

reverse_word_index <- names(word_index) # 34701 fawn

names(reverse_word_index) <- word_index

decoded_review <- sapply(imdb$train$x[[1]], function(index){

# index 0, 1, 2 => reserved indices for "padding", "start of sequence", and "unknown"

word <- if(index>=3) reverse_word_index[[as.character(index-3)]]

if(!is.null(word)) word else "?"

})

##1. Loading DATA -------------------------------------------------------
imdb <- readRDS(file.path(DATA_PATH,"imdb10000.rds"))
c(c(trnData, trnLabels), c(tstData, tstLabels)) %<-% imdb

MAX_LEN <- 20
trnData <- pad_sequences(trnData, maxlen=MAX_LEN)
tstData <- pad_sequences(tstData, maxlen=MAX_LEN)

model <- keras_model_sequential() %>%
  layer_embedding(input_dim=MAX_FEATURES, output_dim=8, input_length=MAX_LEN) %>%
  layer_flatten() %>%
  layer_dense(units=1, activation="sigmoid")

model %>% compile(

optimizer = "rmsprop",

loss = "binary_crossentropy",

metrics = c("acc"))

history <- model %>% fit(trnData, trnLabels,

epochs = 10,

batch_size = 32,

validation_split = 0.2)
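With `MAX_FEATURES = 10000`, `MAX_LEN = 20` and 8 embedding dimensions, the number of trainable weights follows directly from the layer shapes, and `summary(model)` should show the same counts (Embedding 80,000, Flatten 0, Dense 161). The arithmetic below is only a hand check of those numbers.

```r
MAX_FEATURES <- 10000
MAX_LEN <- 20
EMB_DIM <- 8

embedding_params <- MAX_FEATURES * EMB_DIM   # one 8-dim vector per word: 80,000
flatten_params <- 0                          # flatten only reshapes
dense_params <- MAX_LEN * EMB_DIM + 1        # 160 weights + 1 bias: 161
total_params <- embedding_params + flatten_params + dense_params
total_params                                 # 80161
```

Almost all of the capacity sits in the embedding table, while the classifier itself is a single 161-weight logistic unit.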