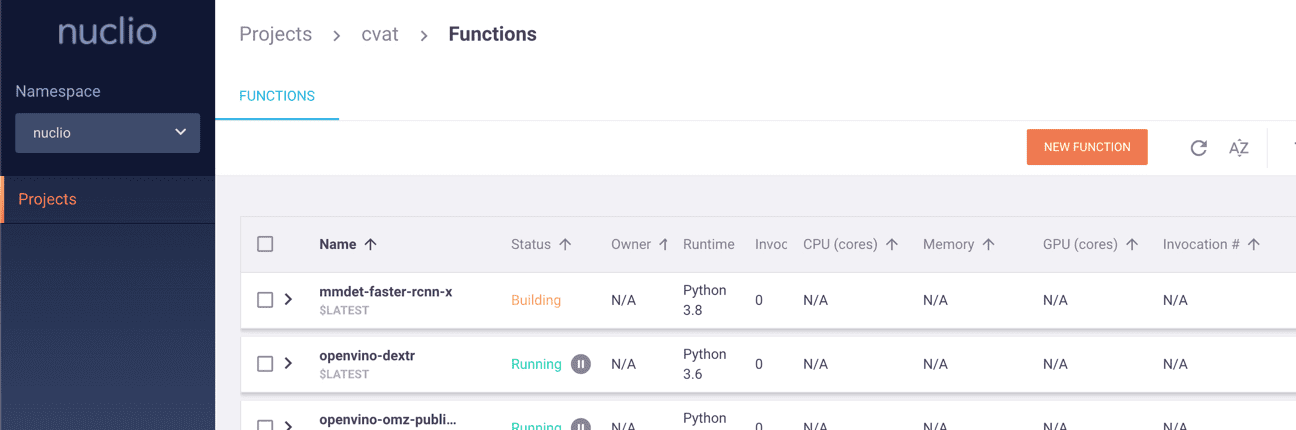

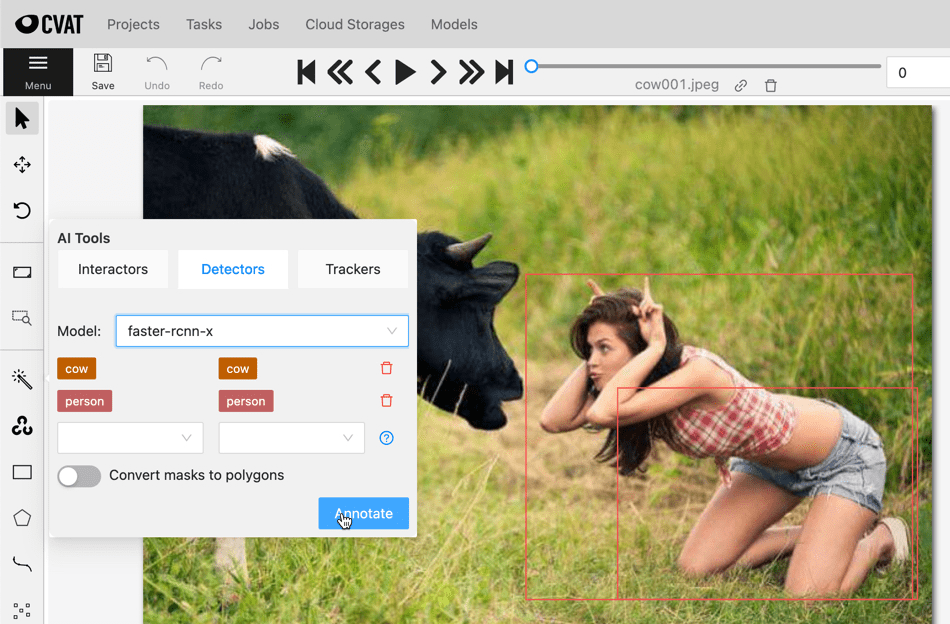

Nuclio model deployment: custom model

Model deployment: custom model (mmdetection)

0. Check the inference demo

https://onesixx.com/inference-demo/

Review the inference demo before writing the handler in main.py (init_detector, inference_detector, [show_result_pyplot]).

https://onesixx.com/mmdet-inference/

Copy demo/image_demo.py to demo_img.py.

# ln -s /raid/templates/cvat cvat (symlink created inside the mmdet directory)

# ~/my/git/mmdetection/cvat/

# ~/my/git/mmdetection/cvat/serverless

# pwd

# ~/my/git/mmdetection/

$ python demo_img.py \
    demo/demo.jpg \
    configs/faster_rcnn/faster_rcnn_r50_fpn_1x_coco.py \
    checkpoints/faster_rcnn_r50_fpn_1x_coco_20200130-047c8118.pth \
    --out-file demo/out/demo_out.jpg \
    --score-thr 0.4 \
    --device cuda:6

- Create the config file (work_dirs/faster_rcnn/faster_rcnn.py)

- Verify the checkpoint file (work_dirs/faster_rcnn/latest.pth)
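Before wiring the model into Nuclio, it may help to confirm that both files are actually present. The following is a minimal sketch, not part of the original setup: the helper name `find_model_files` is hypothetical, and it assumes the config is the first `*.py` file and the checkpoint the first `*.pth` file in one directory.

```python
import os
import tempfile


def find_model_files(param_dir):
    """Return (config_path, checkpoint_path) found in param_dir.

    Picks the first *.py file as the config and the first *.pth file as the
    checkpoint (sorted by name); raises FileNotFoundError if either is missing.
    """
    names = sorted(os.listdir(param_dir))
    configs = [n for n in names if n.endswith(".py")]
    checkpoints = [n for n in names if n.endswith(".pth")]
    if not configs:
        raise FileNotFoundError(f"no config (*.py) in {param_dir}")
    if not checkpoints:
        raise FileNotFoundError(f"no checkpoint (*.pth) in {param_dir}")
    return (os.path.join(param_dir, configs[0]),
            os.path.join(param_dir, checkpoints[0]))


if __name__ == "__main__":
    # Demonstrate on a throwaway directory holding empty placeholder files.
    with tempfile.TemporaryDirectory() as d:
        for name in ("faster_rcnn.py", "latest.pth"):
            open(os.path.join(d, name), "w").close()
        print(find_model_files(d))
```

Failing early with a clear message is usually easier to debug than a stack trace from inside the model loader.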

1. MakeConfig

$ python sixxtools/makeConfig_sixx.py \
    --fromconfig configs/faster_rcnn/faster_rcnn_r50_fpn_1x_coco.py \
    --toconfig sixxconfigs/faster_rcnn_r50_fpn_1x_coco_001.py

See https://onesixx.com/mmdet-train/ for reference.

Collect and organize the dataset > select a model and checkpoint > edit the config > train

2. dockerfile

$ docker build -t base.me cvat/serverless/sixx/mmdet/faster_rcnn/nuclio/

~/my/git/mmdetection/docker$ docker build -t base.mmdet .

FROM ubuntu:20.04

ENV TZ=Asia/Seoul
RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo $TZ > /etc/timezone
ENV PATH /opt/conda/bin:$PATH

RUN apt update
RUN apt install -y python3.8 \
    && ln -s /usr/bin/python3.8 /usr/bin/python3
RUN apt install -y tzdata
RUN apt install -y --no-install-recommends wget git ninja-build ca-certificates libglib2.0-0 libsm6 libxrender-dev libxrender1 libxext6 python3.8-dev build-essential ffmpeg

# Install miniconda3
RUN wget --quiet https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh -O ~/miniconda.sh && \
    /bin/bash ~/miniconda.sh -b -p /opt/conda && \
    rm ~/miniconda.sh && \
    /opt/conda/bin/conda clean -tipy && \
    ln -s /opt/conda/etc/profile.d/conda.sh /etc/profile.d/conda.sh && \
    echo ". /opt/conda/etc/profile.d/conda.sh" >> ~/.bashrc && \
    echo "conda activate mmdet380" >> ~/.bashrc

# Create the conda environment; -y avoids the interactive prompt during the build
RUN conda create -y -n mmdet380 python=3.8
# Run all following RUN steps inside the mmdet380 environment
SHELL ["conda", "run", "-n", "mmdet380", "/bin/bash", "-c"]
RUN conda install -y pytorch torchvision -c pytorch
RUN conda install -y pip
RUN pip install --upgrade pip setuptools wheel
RUN pip install pycocotools
RUN pip install openmim
RUN mim install mmdet

ENTRYPOINT ["conda", "run", "-n", "mmdet380"]

function.yaml

metadata:
  name: mmdet-faster-rcnn-x
  namespace: cvat
  annotations:
    name: faster-rcnn-x
    type: detector
    framework: pytorch
    spec: |
      [
        { "id": 1, "name": "person" },
        { "id": 2, "name": "bicycle" },
        { "id": 3, "name": "car" },
        { "id": 4, "name": "motorcycle" },
        { "id": 5, "name": "airplane" },
        { "id": 6, "name": "bus" },
        { "id": 7, "name": "train" },
        { "id": 8, "name": "truck" },
        { "id": 9, "name": "boat" },
        { "id":10, "name": "traffic_light" },
        { "id":11, "name": "fire_hydrant" },
        { "id":13, "name": "stop_sign" },
        { "id":14, "name": "parking_meter" },
        { "id":15, "name": "bench" },
        { "id":16, "name": "bird" },
        { "id":17, "name": "cat" },
        { "id":18, "name": "dog" },
        { "id":19, "name": "horse" },
        { "id":20, "name": "sheep" },
        { "id":21, "name": "cow" },
        { "id":22, "name": "elephant" },
        { "id":23, "name": "bear" },
        { "id":24, "name": "zebra" },
        { "id":25, "name": "giraffe" },
        { "id":27, "name": "backpack" },
        { "id":28, "name": "umbrella" },
        { "id":31, "name": "handbag" },
        { "id":32, "name": "tie" },
        { "id":33, "name": "suitcase" },
        { "id":34, "name": "frisbee" },
        { "id":35, "name": "skis" },
        { "id":36, "name": "snowboard" },
        { "id":37, "name": "sports_ball" },
        { "id":38, "name": "kite" },
        { "id":39, "name": "baseball_bat" },
        { "id":40, "name": "baseball_glove" },
        { "id":41, "name": "skateboard" },
        { "id":42, "name": "surfboard" },
        { "id":43, "name": "tennis_racket" },
        { "id":44, "name": "bottle" },
        { "id":46, "name": "wine_glass" },
        { "id":47, "name": "cup" },
        { "id":48, "name": "fork" },
        { "id":49, "name": "knife" },
        { "id":50, "name": "spoon" },
        { "id":51, "name": "bowl" },
        { "id":52, "name": "banana" },
        { "id":53, "name": "apple" },
        { "id":54, "name": "sandwich" },
        { "id":55, "name": "orange" },
        { "id":56, "name": "broccoli" },
        { "id":57, "name": "carrot" },
        { "id":58, "name": "hot_dog" },
        { "id":59, "name": "pizza" },
        { "id":60, "name": "donut" },
        { "id":61, "name": "cake" },
        { "id":62, "name": "chair" },
        { "id":63, "name": "couch" },
        { "id":64, "name": "potted_plant" },
        { "id":65, "name": "bed" },
        { "id":67, "name": "dining_table" },
        { "id":70, "name": "toilet" },
        { "id":72, "name": "tv" },
        { "id":73, "name": "laptop" },
        { "id":74, "name": "mouse" },
        { "id":75, "name": "remote" },
        { "id":76, "name": "keyboard" },
        { "id":77, "name": "cell_phone" },
        { "id":78, "name": "microwave" },
        { "id":79, "name": "oven" },
        { "id":80, "name": "toaster" },
        { "id":81, "name": "sink" },
        { "id":83, "name": "refrigerator" },
        { "id":84, "name": "book" },
        { "id":85, "name": "clock" },
        { "id":86, "name": "vase" },
        { "id":87, "name": "scissors" },
        { "id":88, "name": "teddy_bear" },
        { "id":89, "name": "hair_drier" },
        { "id":90, "name": "toothbrush" }
      ]

spec:
  description: faster-rcnn-x
  runtime: "python:3.8"
  handler: main:handler
  eventTimeout: 30s

  build:
    image: cvat/sixx.mm.fast
    baseImage: makeimg #competent_dubinsky #mytag #base.bkseo

    directives:
      preCopy:
        - kind: USER
          value: root
        - kind: WORKDIR
          value: /opt/nuclio
        - kind: ENV
          value: PATH="/root/miniconda3/bin:${PATH}"
        - kind: ARG
          value: PATH="/root/miniconda3/bin:${PATH}"
        - kind: ENTRYPOINT
          value: '["conda", "run", "-n", "mmdet380"]'

  triggers:
    myHttpTrigger:
      maxWorkers: 2
      kind: "http"
      workerAvailabilityTimeoutMilliseconds: 10000
      attributes:
        maxRequestBodySize: 33554432 # 32MB

  platform:
    attributes:
      restartPolicy:
        name: always
        maximumRetryCount: 3
      mountMode: volume
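The `metadata.annotations.spec` value is a JSON string, and its ids are not contiguous (for example, 12 and 26 are skipped). A detector that reports classes as 0-based indices therefore has to be mapped by list position, not by id. The sketch below illustrates that with a shortened three-entry copy of the list; the helper names `load_classes` and `label_name` are hypothetical.

```python
import json

# Shortened copy of the `metadata.annotations.spec` string (3 of the 80 entries).
LABELS_SPEC = """
[
  { "id": 1, "name": "person" },
  { "id": 2, "name": "bicycle" },
  { "id":13, "name": "stop_sign" }
]
"""


def load_classes(spec_text):
    """Parse the JSON label list stored in the function.yaml annotation."""
    return json.loads(spec_text)


def label_name(classes, label_index):
    """Map a 0-based detector class index to a label name by list position."""
    return classes[label_index]["name"]


classes = load_classes(LABELS_SPEC)
# Index 2 is the third entry of this shortened list, whose COCO id is 13.
print(label_name(classes, 2))
```

Using `json.loads` rather than `eval` keeps the annotation from being executed as Python code.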

Settings for reaching the model from outside

Specify the Nuclio function's port number and the CVAT network.

Add a specific port under spec.triggers.myHttpTrigger.attributes, and add the Docker network created when Docker Compose was run (cvat_cvat) under spec.platform.attributes.

triggers:
  myHttpTrigger:
    maxWorkers: 2
    kind: 'http'
    workerAvailabilityTimeoutMilliseconds: 10000
    attributes:
      maxRequestBodySize: 33554432
      port: 33000 # added port

platform:
  attributes:
    restartPolicy:
      name: always
      maximumRetryCount: 3
    mountMode: volume
    network: cvat_cvat # added Docker network
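With a fixed port, the function can be called directly over HTTP. The following is a minimal client sketch using only the standard library. It assumes the function is reachable at `http://localhost:33000` and that the handler expects a JSON body with a base64-encoded `image` field, as the handler in this post does. The names `build_request` and `detect` are hypothetical.

```python
import base64
import json
import urllib.request

# Assumption: the function is exposed on the port configured in function.yaml.
FUNCTION_URL = "http://localhost:33000"


def build_request(image_bytes, url=FUNCTION_URL):
    """Build a POST request whose JSON body carries the image as base64 text."""
    payload = {"image": base64.b64encode(image_bytes).decode("ascii")}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def detect(image_path, url=FUNCTION_URL):
    """Send an image file to the function and return the decoded JSON reply."""
    with open(image_path, "rb") as f:
        request = build_request(f.read(), url)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

A call such as `detect("demo/demo.jpg")` only works once the function has been deployed and is running.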

Applying to Nuclio

2. Adapt the source code that runs the DL model locally to the Nuclio platform

2-1 Load the model into memory (using the init_context(context) function)

from detectron2.engine.defaults import DefaultPredictor
from detectron2.model_zoo import get_config

# CONFIG_OPTS and CONFIDENCE_THRESHOLD are module-level constants defined elsewhere in main.py.

def init_context(context):
    # Create the DB connection under "context.user_data"
    # setattr(context.user_data, 'my_db_connection', my_db.create_connection())
    cfg = get_config('COCO-Detection/retinanet_R_101_FPN_3x.yaml')
    cfg.merge_from_list(CONFIG_OPTS)
    cfg.MODEL.RETINANET.SCORE_THRESH_TEST = CONFIDENCE_THRESHOLD
    cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = CONFIDENCE_THRESHOLD
    cfg.MODEL.PANOPTIC_FPN.COMBINE.INSTANCES_CONFIDENCE_THRESH = CONFIDENCE_THRESHOLD
    cfg.freeze()
    predictor = DefaultPredictor(cfg)
    # Keep the loaded predictor so that handler() can reuse it
    context.user_data.model_handler = predictor

def handler(context, event):
    # context.user_data.my_db_connection.query(...)
    pass
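The point of this pattern is that the expensive load happens once, in `init_context`, and every request reuses the object stored on `context.user_data`. The sketch below simulates it without Nuclio or a real model. `load_model` is a hypothetical stand-in that only counts its calls, and the `context` and `event` objects are plain `SimpleNamespace` instances rather than the real runtime types.

```python
from types import SimpleNamespace

load_count = 0


def load_model():
    """Stand-in for an expensive model load (hypothetical; counts its calls)."""
    global load_count
    load_count += 1
    return lambda image: [{"label": "person", "confidence": 0.99}]


def init_context(context):
    # Called once at start-up: keep the loaded model on context.user_data.
    context.user_data.model = load_model()


def handler(context, event):
    # Called per request: reuse the model stored by init_context.
    return context.user_data.model(event.body)


# Simulate the runtime: one init, then several requests.
context = SimpleNamespace(user_data=SimpleNamespace())
init_context(context)
results = [handler(context, SimpleNamespace(body=b"...")) for _ in range(3)]
print(load_count, len(results))
```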

main.py (adapted from the inference demo)

# Copyright onesixx. All rights reserved.
import os

# Use the first config (*.py) and checkpoint (*.pth) found under ./param
CONFIG = [os.path.join('./param', filenm) for filenm in os.listdir('./param') if filenm.endswith('.py')][0]
CHKPNT = [os.path.join('./param', filenm) for filenm in os.listdir('./param') if filenm.endswith('.pth')][0]
SCORE_THRESHOLD = 0.9
DEVICE = "cpu"  # "cuda:6"
PALETTE = "coco"

# Build the class list from the COCO annotation file
import json
with open('./annotations/instances_train2017.json', 'r') as f:
    data = json.load(f)
categories = data['categories']
category_list = []
for category in categories:
    category_list.append({'id': category['id'], 'name': category['name']})
classes = category_list
# classes = [
#     { "id": 1, "name": "person" },
#     { "id": 2, "name": "bicycle" },
#     ...
#     { "id":90, "name": "toothbrush" }
# ]

import mmcv
from mmdet.apis import init_detector
from mmdet.apis import inference_detector

import io
import base64
from PIL import Image
import numpy as np

def init_context(context):
    context.logger.info("Init context... 0%")
    # Load the model once and keep it on the context for later requests
    model = init_detector(CONFIG, CHKPNT, device=DEVICE)
    context.user_data.model = model
    context.logger.info("Init context... 100%")

def handler(context, event):
    context.logger.info("Run sixx model")
    data = event.body
    buf = io.BytesIO(base64.b64decode(data["image"]))
    # threshold = float(data.get("threshold", SCORE_THRESHOLD))
    # context.user_data.model.conf = threshold
    image = Image.open(buf)
    imgArray = np.array(image)

    result = inference_detector(context.user_data.model, imgArray)
    if isinstance(result, tuple):
        bbox_result, segm_result = result
        if isinstance(segm_result, tuple):
            segm_result = segm_result[0]  # discard the `dim`
    else:
        bbox_result, segm_result = result, None

    img = image.copy()
    # Stack the per-class results; each row is [x1, y1, x2, y2, score]
    bboxes = np.vstack(bbox_result)
    labels = [
        np.full(bbox.shape[0], i, dtype=np.int32)
        for i, bbox in enumerate(bbox_result)
    ]
    labels = np.concatenate(labels)

    scores = bboxes[:, -1]
    inds = scores > SCORE_THRESHOLD
    scores = scores[inds]     # confidence
    labels = labels[inds]     # label
    bboxes = bboxes[inds, :]  # points

    # Mask handling from the demo is disabled here because show_mask, palette
    # and color_val_iter are not defined in this file.
    # if show_mask and segm_result is not None:
    #     segms = mmcv.concat_list(segm_result)
    #     segms = [segms[i] for i in np.where(inds)[0]]
    #     if palette is None:
    #         palette = color_val_iter()
    #     colors = [next(palette) for _ in range(len(segms))]

    encoded_results = []
    if bboxes.shape[0] > 0:
        for i in range(bboxes.shape[0]):
            encoded_results.append({
                'confidence': float(scores[i]),    # numpy floats are not JSON-serializable
                'label': classes[labels[i]]['name'],
                'points': bboxes[i][:4].tolist(),  # drop the trailing score column
                'type': 'rectangle'
            })

    return context.Response(
        body=json.dumps(encoded_results),
        headers={},
        content_type='application/json',
        status_code=200
    )

2-2 To support the flow below, define the entry point in handler and put it in main.py.

- accept incoming HTTP requests

- run inference

- reply with detection results

# Copyright onesixx. All rights reserved.
import os

# CONFIG = [os.path.join("./param", filenm) for filenm in os.listdir('./param') if filenm.endswith('.py')][0]
# CHKPNT = [os.path.join("./param", filenm) for filenm in os.listdir('./param') if filenm.endswith('.pth')][0]
CONFIG = '/opt/nuclio/param/faster_rcnn_r50_fpn_1x_coco_001.py'
CHKPNT = '/opt/nuclio/param/faster_rcnn_r50_fpn_1x_coco_20200130-047c8118.pth'
SCORE_THRESHOLD = 0.9
DEVICE = "cpu"  # "cuda:6"
PALETTE = "coco"

import mmcv
from mmdet.apis import init_detector
from mmdet.apis import inference_detector

import io
import base64
from PIL import Image
import numpy as np
import yaml
import json
# from model_loader import ModelLoader

# Read the label list from the deployed function.yaml
with open("/opt/nuclio/function.yaml", 'rb') as function_file:
    functionconfig = yaml.safe_load(function_file)
# with open("./function.yaml", 'rb') as function_file: functionconfig = yaml.safe_load(function_file)
labels_spec = functionconfig['metadata']['annotations']['spec']
# The spec is a JSON string, so parse it instead of calling eval()
classes = json.loads(labels_spec)
# classes = [
#     { "id": 1, "name": "person" },
#     { "id": 2, "name": "bicycle" },
#     ...
#     { "id":90, "name": "toothbrush" }
# ]

def init_context(context):
    context.logger.info("Init context... 0%")
    # Load the model once and keep it on the context for later requests
    model_handler = init_detector(CONFIG, CHKPNT, device=DEVICE)
    # model_handler = ModelLoader(classes)
    context.user_data.model = model_handler
    context.logger.info("Init context... 100%")

def handler(context, event):
    context.logger.info("Run sixx model")
    data = event.body
    buf = io.BytesIO(base64.b64decode(data["image"]))
    # threshold = float(data.get("threshold", SCORE_THRESHOLD))
    # context.user_data.model.conf = threshold
    # buf = './imgs/demo.jpg'
    image = Image.open(buf)
    imgArray = np.array(image)

    # result = inference_detector(model_handler, imgArray)
    result = inference_detector(context.user_data.model, imgArray)
    if isinstance(result, tuple):
        bbox_result, segm_result = result
        if isinstance(segm_result, tuple):
            segm_result = segm_result[0]  # discard the `dim`
    else:
        bbox_result, segm_result = result, None

    img = image.copy()
    # Stack the per-class results; each row is [x1, y1, x2, y2, score]
    bboxes = np.vstack(bbox_result)
    labels = [
        np.full(bbox.shape[0], i, dtype=np.int32)
        for i, bbox in enumerate(bbox_result)
    ]
    labels = np.concatenate(labels)

    scores = bboxes[:, -1]
    inds = scores > SCORE_THRESHOLD
    scores = scores[inds]     # confidence
    labels = labels[inds]     # label
    bboxes = bboxes[inds, :]  # points

    # if show_mask and segm_result is not None:
    #     segms = mmcv.concat_list(segm_result)
    #     segms = [segms[i] for i in np.where(inds)[0]]
    #     if palette is None:
    #         palette = color_val_iter()
    #     colors = [next(palette) for _ in range(len(segms))]

    encoded_results = []
    if bboxes.shape[0] > 0:
        for i in range(bboxes.shape[0]):
            encoded_results.append({
                'confidence': float(scores[i]),
                'label': classes[labels[i]]['name'],
                'points': bboxes[i][:4].tolist(),
                'type': 'rectangle'
            })

    return context.Response(
        body=json.dumps(encoded_results),
        headers={},
        content_type='application/json',
        status_code=200
    )
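The post-processing step in the handler (flatten the per-class boxes, drop low scores, emit one dict per box) can be tried without mmdet or numpy. The following is a minimal sketch under stated assumptions: plain lists replace the arrays, each box is `[x1, y1, x2, y2, score]`, results come one list per class in class order, and the function name `encode_detections` is hypothetical.

```python
import json

SCORE_THRESHOLD = 0.9


def encode_detections(bbox_result, classes, threshold=SCORE_THRESHOLD):
    """Convert per-class boxes into the list of dicts the handler returns.

    bbox_result: one list per class, each box given as [x1, y1, x2, y2, score].
    classes:     list of {"id": ..., "name": ...} in the same class order.
    """
    encoded = []
    for class_index, boxes in enumerate(bbox_result):
        for x1, y1, x2, y2, score in boxes:
            if score > threshold:
                encoded.append({
                    "confidence": float(score),
                    "label": classes[class_index]["name"],
                    "points": [float(x1), float(y1), float(x2), float(y2)],
                    "type": "rectangle",
                })
    return encoded


classes = [{"id": 1, "name": "person"}, {"id": 2, "name": "bicycle"}]
bbox_result = [
    [[10, 20, 110, 220, 0.97], [5, 5, 50, 50, 0.40]],  # person: one kept, one dropped
    [[30, 40, 90, 120, 0.95]],                          # bicycle: kept
]
print(json.dumps(encode_detections(bbox_result, classes)))
```

Converting every value to a built-in `float` before `json.dumps` matters in the real handler as well, since numpy scalar types are not JSON-serializable.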


from model_handler import ModelHandler

3. Deploy

To use the new serverless function, deploy it with the nuctl command (as was done for the built-in model above). The following files are needed:

- function.yaml

- main.py

- model_handler.py

Method 1)

$ nuctl deploy --project-name cvat \
    --path cvat/serverless/sixx/mmdet/faster_rcnn_x/nuclio/ \
    --volume `pwd`/serverless/common:/opt/nuclio/common \
    --platform local

Method 2)

$ serverless/deploy_cpu.sh \
    serverless/pytorch/facebookresearch/detectron2/retinanet/

$ docker ps -a
CONTAINER ID   IMAGE                        COMMAND                  CREATED          STATUS                    PORTS                     NAMES
264a2739eeb6   cvat/sixx.mm.fast:latest     "conda run -n mmdet3…"   47 seconds ago   Up 46 seconds (healthy)   0.0.0.0:49377->8080/tcp   nuclio-nuclio-mmdet-faster-rcnn-x
e71c29400634   gcr.io/iguazio/alpine:3.15   "/bin/sh -c '/bin/sl…"   5 hours ago      Up 5 hours                                          nuclio-local-storage-reader
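The PORTS column shows which host port Docker mapped to the function's container port 8080 (49377 in the output above, when no fixed port is set). As a small sketch, the host port can be extracted from that field with a regular expression; the helper name `host_port` is hypothetical.

```python
import re


def host_port(ports_field, container_port=8080):
    """Return the host port mapped to container_port, or None if absent."""
    pattern = r":(\d+)->" + str(container_port) + r"/tcp"
    match = re.search(pattern, ports_field)
    return int(match.group(1)) if match else None


print(host_port("0.0.0.0:49377->8080/tcp"))
```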

Issue

https://github.com/opencv/cvat/issues/3457 : Steps for custom model deployment

How to upload DL which built by myself?

https://github.com/opencv/cvat/issues/5551 : Mmdetection MaskRCNN serverless support for semi-automatic annotation

https://github.com/opencv/cvat/issues/4909 : Load my own Yolov5 model on cvat by nuclio

mmdetection model conversion

https://mmdetection.readthedocs.io/en/latest/useful_tools.html#model-conversion

Exporting MMDetection models to ONNX format

https://medium.com/axinc-ai/exporting-mmdetection-models-to-onnx-format-3ec839c38ff

openvino : https://da2so.tistory.com/63

Serving pre-trained ML/DL models

https://docs.mlrun.org/en/stable/tutorial/03-model-serving.html#serving-pre-trained-ml-dl-models

Docker cleanup

A dangling image is an image that is no longer attached to any container and has lost its tag.

https://sarc.io/index.php/aws/1921-docker

docker rmi $(docker images -f "dangling=true" -q)

docker exec -it nuclio-nuclio-mmdet-faster-rcnn-x bash