Nuclio Deploying Functions

Deploying a Built-in Model

Serverless tutorial by CVAT: https://opencv.github.io/cvat/docs/manual/advanced/serverless-tutorial/

CVAT ships ready-made serverless functions, so all you need to do is deploy them.

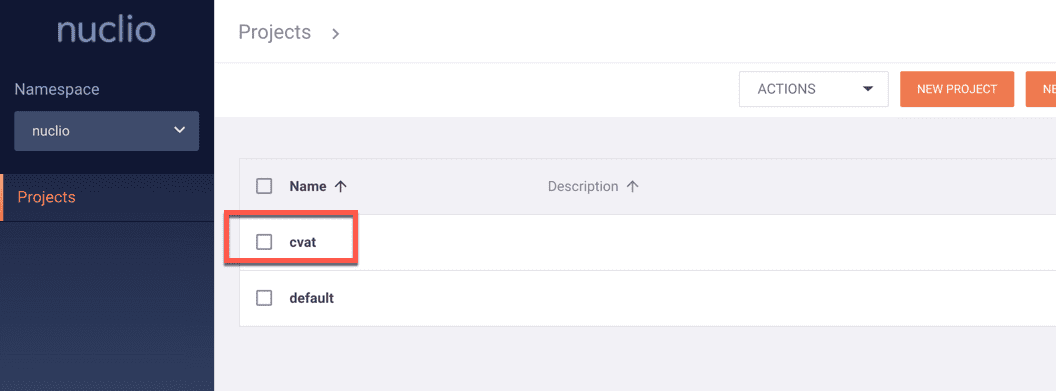

Create the cvat project

The serverless functions and models are stored in this project.

$ nuctl create project cvat

Deploy function

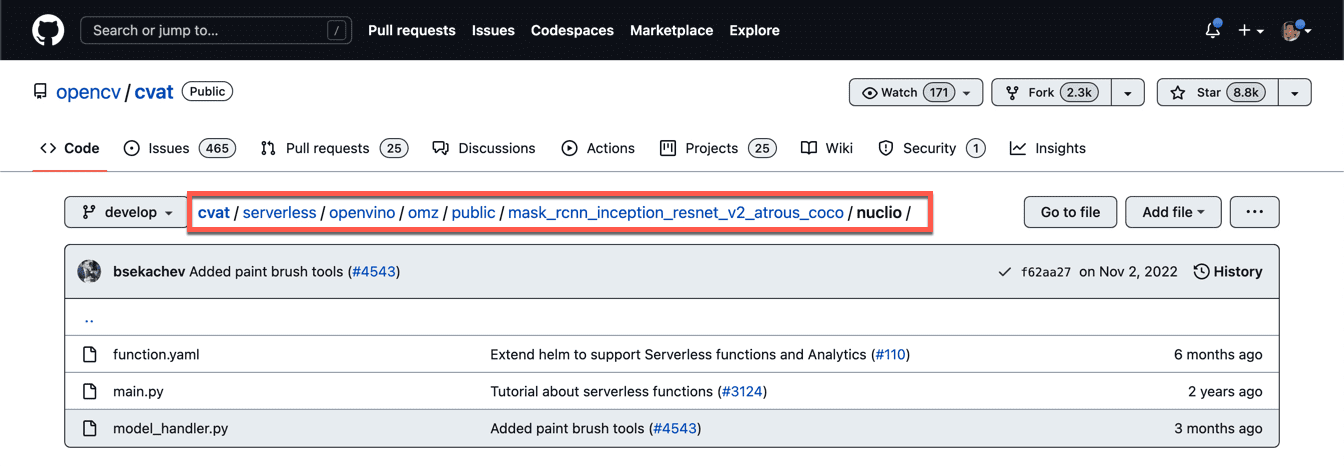

Deployable serverless functions and models provided by CVAT (https://github.com/openvinotoolkit/cvat/tree/develop/serverless)

    $ nuctl deploy \
        --project-name cvat \
        --path serverless/openvino/omz/public/mask_rcnn_inception_resnet_v2_atrous_coco/nuclio \
        --volume `pwd`/serverless/common:/opt/nuclio/common \
        --platform local

When deploying to a local environment, add the --platform local option.

--http-trigger-service-type NodePort

    # Alternative: use the deploy scripts
    $ serverless/deploy_cpu.sh \
        serverless/openvino/omz/public/mask_rcnn_inception_resnet_v2_atrous_coco/nuclio
    $ serverless/deploy_gpu.sh \
        serverless/tensorflow/matterport/mask_rcnn
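If the deploy step has to be scripted, the same command can be assembled in Python. This is only a sketch: the helper name `build_deploy_command` and its defaults are assumptions made for illustration, and the flags are the ones already used in the command above.

```python
import shlex
from pathlib import Path


def build_deploy_command(function_dir, project="cvat", common_dir="serverless/common"):
    """Return the argv list for `nuctl deploy` with the options used in these notes."""
    # The shell version wraps the path in `pwd` to get an absolute host path;
    # resolve() does the same relative to the current working directory.
    host_common = Path(common_dir).resolve()
    return [
        "nuctl", "deploy",
        "--project-name", project,
        "--path", str(function_dir),
        "--volume", f"{host_common}:/opt/nuclio/common",
        "--platform", "local",
    ]


cmd = build_deploy_command(
    "serverless/openvino/omz/public/mask_rcnn_inception_resnet_v2_atrous_coco/nuclio"
)
print(shlex.join(cmd))
# To actually deploy, pass the list to subprocess.run(cmd, check=True).
```

Building an argv list instead of a shell string avoids quoting problems when a path contains spaces.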

23.02.09 17:11:16.786 nuctl (I) Deploying function {"name": ""}

23.02.09 17:11:16.787 nuctl (I) Building {"builderKind": "docker", "versionInfo": "Label: 1.8.14, Git commit: cbb0774230996a3eb4621c1a2079e2317578005b, OS: linux, Arch: amd64, Go version: go1.17.8", "name": ""}

23.02.09 17:11:17.014 nuctl (I) Staging files and preparing base images

23.02.09 17:11:17.015 nuctl (W) Python 3.6 runtime is deprecated and will soon not be supported. Please migrate your code and use Python 3.7 runtime (`python:3.7`) or higher

23.02.09 17:11:17.015 nuctl (I) Building processor image {"registryURL": "", "imageName": "cvat.openvino.omz.public.mask_rcnn_inception_resnet_v2_atrous_coco:latest"}

23.02.09 17:11:17.015 nuctl.platform.docker (I) Pulling image {"imageName": "quay.io/nuclio/handler-builder-python-onbuild:1.8.14-amd64"}

23.02.09 17:11:20.175 nuctl.platform.docker (I) Pulling image {"imageName": "quay.io/nuclio/uhttpc:0.0.1-amd64"}

23.02.09 17:11:24.389 nuctl.platform (I) Building docker image {"image": "cvat.openvino.omz.public.mask_rcnn_inception_resnet_v2_atrous_coco:latest"}

23.02.09 17:17:55.663 nuctl.platform (I) Pushing docker image into registry {"image": "cvat.openvino.omz.public.mask_rcnn_inception_resnet_v2_atrous_coco:latest", "registry": ""}

23.02.09 17:17:55.663 nuctl.platform (I) Docker image was successfully built and pushed into docker registry {"image": "cvat.openvino.omz.public.mask_rcnn_inception_resnet_v2_atrous_coco:latest"}

23.02.09 17:17:55.663 nuctl (I) Build complete {"result": {"Image":"cvat.openvino.omz.public.mask_rcnn_inception_resnet_v2_atrous_coco:latest","UpdatedFunctionConfig":{"metadata":{"name":"openvino-mask-rcnn-inception-resnet-v2-atrous-coco","namespace":"nuclio","labels":{"nuclio.io/project-name":"cvat"},"annotations":{"framework":"openvino","name":"Mask RCNN","spec":"[\

{ \\"id\\": 1, \\"name\\": \\"person\\" },\

{ \\"id\\": 2, \\"name\\": \\"bicycle\\" },\

{ \\"id\\": 3, \\"name\\": \\"car\\" },\

{ \\"id\\": 4, \\"name\\": \\"motorcycle\\" },\

{ \\"id\\": 5, \\"name\\": \\"airplane\\" },\

{ \\"id\\": 6, \\"name\\": \\"bus\\" },\

{ \\"id\\": 7, \\"name\\": \\"train\\" },\

{ \\"id\\": 8, \\"name\\": \\"truck\\" },\

{ \\"id\\": 9, \\"name\\": \\"boat\\" },\

{ \\"id\\":10, \\"name\\": \\"traffic_light\\" },\

{ \\"id\\":11, \\"name\\": \\"fire_hydrant\\" },\

{ \\"id\\":13, \\"name\\": \\"stop_sign\\" },\

{ \\"id\\":14, \\"name\\": \\"parking_meter\\" },\

{ \\"id\\":15, \\"name\\": \\"bench\\" },\

{ \\"id\\":16, \\"name\\": \\"bird\\" },\

{ \\"id\\":17, \\"name\\": \\"cat\\" },\

{ \\"id\\":18, \\"name\\": \\"dog\\" },\

{ \\"id\\":19, \\"name\\": \\"horse\\" },\

{ \\"id\\":20, \\"name\\": \\"sheep\\" },\

{ \\"id\\":21, \\"name\\": \\"cow\\" },\

{ \\"id\\":22, \\"name\\": \\"elephant\\" },\

{ \\"id\\":23, \\"name\\": \\"bear\\" },\

{ \\"id\\":24, \\"name\\": \\"zebra\\" },\

{ \\"id\\":25, \\"name\\": \\"giraffe\\" },\

{ \\"id\\":27, \\"name\\": \\"backpack\\" },\

{ \\"id\\":28, \\"name\\": \\"umbrella\\" },\

{ \\"id\\":31, \\"name\\": \\"handbag\\" },\

{ \\"id\\":32, \\"name\\": \\"tie\\" },\

{ \\"id\\":33, \\"name\\": \\"suitcase\\" },\

{ \\"id\\":34, \\"name\\": \\"frisbee\\" },\

{ \\"id\\":35, \\"name\\": \\"skis\\" },\

{ \\"id\\":36, \\"name\\": \\"snowboard\\" },\

{ \\"id\\":37, \\"name\\": \\"sports_ball\\" },\

{ \\"id\\":38, \\"name\\": \\"kite\\" },\

{ \\"id\\":39, \\"name\\": \\"baseball_bat\\" },\

{ \\"id\\":40, \\"name\\": \\"baseball_glove\\" },\

{ \\"id\\":41, \\"name\\": \\"skateboard\\" },\

{ \\"id\\":42, \\"name\\": \\"surfboard\\" },\

{ \\"id\\":43, \\"name\\": \\"tennis_racket\\" },\

{ \\"id\\":44, \\"name\\": \\"bottle\\" },\

{ \\"id\\":46, \\"name\\": \\"wine_glass\\" },\

{ \\"id\\":47, \\"name\\": \\"cup\\" },\

{ \\"id\\":48, \\"name\\": \\"fork\\" },\

{ \\"id\\":49, \\"name\\": \\"knife\\" },\

{ \\"id\\":50, \\"name\\": \\"spoon\\" },\

{ \\"id\\":51, \\"name\\": \\"bowl\\" },\

{ \\"id\\":52, \\"name\\": \\"banana\\" },\

{ \\"id\\":53, \\"name\\": \\"apple\\" },\

{ \\"id\\":54, \\"name\\": \\"sandwich\\" },\

{ \\"id\\":55, \\"name\\": \\"orange\\" },\

{ \\"id\\":56, \\"name\\": \\"broccoli\\" },\

{ \\"id\\":57, \\"name\\": \\"carrot\\" },\

{ \\"id\\":58, \\"name\\": \\"hot_dog\\" },\

{ \\"id\\":59, \\"name\\": \\"pizza\\" },\

{ \\"id\\":60, \\"name\\": \\"donut\\" },\

{ \\"id\\":61, \\"name\\": \\"cake\\" },\

{ \\"id\\":62, \\"name\\": \\"chair\\" },\

{ \\"id\\":63, \\"name\\": \\"couch\\" },\

{ \\"id\\":64, \\"name\\": \\"potted_plant\\" },\

{ \\"id\\":65, \\"name\\": \\"bed\\" },\

{ \\"id\\":67, \\"name\\": \\"dining_table\\" },\

{ \\"id\\":70, \\"name\\": \\"toilet\\" },\

{ \\"id\\":72, \\"name\\": \\"tv\\" },\

{ \\"id\\":73, \\"name\\": \\"laptop\\" },\

{ \\"id\\":74, \\"name\\": \\"mouse\\" },\

{ \\"id\\":75, \\"name\\": \\"remote\\" },\

{ \\"id\\":76, \\"name\\": \\"keyboard\\" },\

{ \\"id\\":77, \\"name\\": \\"cell_phone\\" },\

{ \\"id\\":78, \\"name\\": \\"microwave\\" },\

{ \\"id\\":79, \\"name\\": \\"oven\\" },\

{ \\"id\\":80, \\"name\\": \\"toaster\\" },\

{ \\"id\\":81, \\"name\\": \\"sink\\" },\

{ \\"id\\":83, \\"name\\": \\"refrigerator\\" },\

{ \\"id\\":84, \\"name\\": \\"book\\" },\

{ \\"id\\":85, \\"name\\": \\"clock\\" },\

{ \\"id\\":86, \\"name\\": \\"vase\\" },\

{ \\"id\\":87, \\"name\\": \\"scissors\\" },\

{ \\"id\\":88, \\"name\\": \\"teddy_bear\\" },\

{ \\"id\\":89, \\"name\\": \\"hair_drier\\" },\

{ \\"id\\":90, \\"name\\": \\"toothbrush\\" }\

]\

","type":"detector"}},"spec":{"description":"Mask RCNN inception resnet v2 COCO via Intel OpenVINO","handler":"main:handler","runtime":"python:3.6","env":[{"name":"NUCLIO_PYTHON_EXE_PATH","value":"/opt/nuclio/common/openvino/python3"}],"resources":{"requests":{"cpu":"25m","memory":"1Mi"}},"image":"cvat.openvino.omz.public.mask_rcnn_inception_resnet_v2_atrous_coco:latest","targetCPU":75,"triggers":{"myHttpTrigger":{"class":"","kind":"http","name":"myHttpTrigger","maxWorkers":2,"workerAvailabilityTimeoutMilliseconds":10000,"attributes":{"maxRequestBodySize":33554432}}},"volumes":[{"volume":{"name":"volume-1","hostPath":{"path":"/raid/templates/cvat/serverless/common"}},"volumeMount":{"name":"volume-1","mountPath":"/opt/nuclio/common"}}],"build":{"image":"cvat.openvino.omz.public.mask_rcnn_inception_resnet_v2_atrous_coco","baseImage":"openvino/ubuntu18_dev:2020.2","directives":{"postCopy":[{"kind":"RUN","value":"apt update && DEBIAN_FRONTEND=noninteractive apt install --no-install-recommends -y python3-skimage"},{"kind":"RUN","value":"pip3 install \\"numpy<1.16.0\\""}],"preCopy":[{"kind":"USER","value":"root"},{"kind":"WORKDIR","value":"/opt/nuclio"},{"kind":"RUN","value":"ln -s /usr/bin/pip3 /usr/bin/pip"},{"kind":"RUN","value":"/opt/intel/openvino/deployment_tools/open_model_zoo/tools/downloader/downloader.py --name mask_rcnn_inception_resnet_v2_atrous_coco -o /opt/nuclio/open_model_zoo"},{"kind":"RUN","value":"/opt/intel/openvino/deployment_tools/open_model_zoo/tools/downloader/converter.py --name mask_rcnn_inception_resnet_v2_atrous_coco --precisions FP32 -d /opt/nuclio/open_model_zoo -o /opt/nuclio/open_model_zoo"}]},"codeEntryType":"image"},"platform":{"attributes":{"mountMode":"volume","restartPolicy":{"maximumRetryCount":3,"name":"always"}}},"readinessTimeoutSeconds":120,"securityContext":{},"eventTimeout":"60s"}}}}

23.02.09 17:17:55.671 nuctl (I) Cleaning up before deployment {"functionName": "openvino-mask-rcnn-inception-resnet-v2-atrous-coco"}

23.02.09 17:17:56.545 nuctl.platform (I) Waiting for function to be ready {"timeout": 120}

23.02.09 17:17:58.560 nuctl (I) Function deploy complete {"functionName": "openvino-mask-rcnn-inception-resnet-v2-atrous-coco", "httpPort": 49156, "internalInvocationURLs": ["172.17.0.7:8080"], "externalInvocationURLs": []}

Check the deployed model

    $ nuctl get functions
    NAMESPACE | NAME | PROJECT | STATE | REPLICAS | NODE PORT
    nuclio | openvino-mask-rcnn-inception-resnet-v2-atrous-coco | cvat | ready | 1/1 | 49156
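To check the state from a script, the table can be parsed into dictionaries. The sketch below assumes the pipe-separated layout exactly as it is pasted in these notes; the real `nuctl` output may add borders or padding, so treat `parse_functions_table` as a hypothetical helper to adapt.

```python
def parse_functions_table(text):
    """Parse a pipe-separated table like the `nuctl get functions` output above."""
    rows = [
        [cell.strip() for cell in line.split("|")]
        for line in text.strip().splitlines()
        if "|" in line
    ]
    header, body = rows[0], rows[1:]
    return [dict(zip(header, row)) for row in body]


sample = """
NAMESPACE | NAME | PROJECT | STATE | REPLICAS | NODE PORT
nuclio | openvino-mask-rcnn-inception-resnet-v2-atrous-coco | cvat | ready | 1/1 | 49156
"""

for fn in parse_functions_table(sample):
    # Flag anything that is not in the "ready" state.
    status = "OK" if fn["STATE"] == "ready" else "CHECK"
    print(status, fn["NAME"], fn["NODE PORT"])
```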

Example using Python

https://nuclio.io/docs/latest/examples/#python-examples

Nuclio: https://github.com/nuclio/nuclio/tree/master/hack/examples#python-examples

| Example | Function | Description |
| --- | --- | --- |
| Hello World | helloworld | A simple function that showcases unstructured logging and a structured response. |
| Encrypt | encrypt | A function that uses a 3rd-party Python package to encrypt the event body, and showcases build commands for installing both OS-level and Python packages. |
| FaceRecognizer | face | A function that uses Microsoft's face API, configured with function environment variables. The function uses 3rd-party Python packages, which are installed by using an inline configuration. |
| Sentiment Analysis | sentiments | A function that uses the vaderSentiment library to classify text strings into a negative or positive sentiment score. |
| TensorFlow | tensorflow | A function that uses the inception model of the TensorFlow open-source machine-learning library to classify images. The function demonstrates advanced uses of Nuclio with a custom base image, third-party Python packages, pre-loading data into function memory (the AI Model), structured logging, and exception handling. |

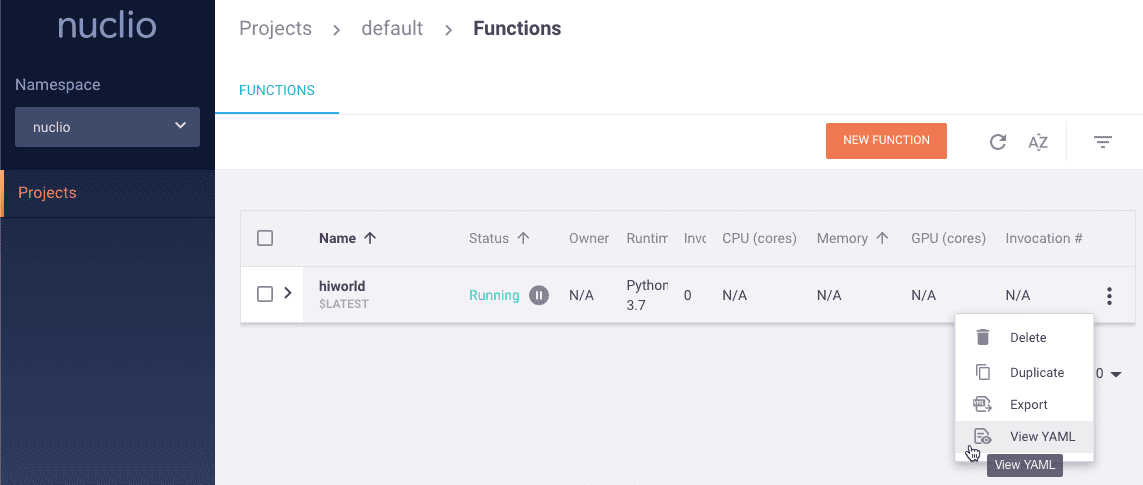

Hello World

Function-Configuration

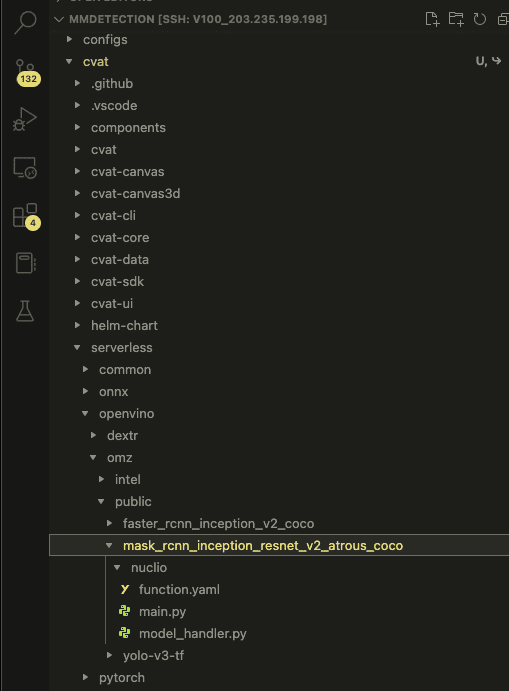

Prepare function.yaml, main.py, and model_handler.py

function.yaml

    apiVersion: "nuclio.io/v1beta1"
    kind: "NuclioFunction"
    metadata:
      name: hiworld
    spec:
      # image: xxx:latest
      description: For Test Showcases unstructured logging and a structured response.
      runtime: "python"
      handler: main:handler
      minReplicas: 1
      maxReplicas: 1

main.py

    # from model_handler import ModelHandler

    def init_context(context):
        context.logger.info("Init context...  0%")
        # model = ModelHandler()
        # context.user_data.model = model
        context.logger.info("Init context...100%")

    def handler(context, event):
        context.logger.info('This is an unstructured log')
        return context.Response(
            body='hihi, from nuclio :]',
            headers={},
            content_type='text/plain',
            status_code=200
        )

model_handler.py

    # import cv2
    # import numpy as np
    import os
    # from model_loader import ModelLoader

    # class ModelHandler:
    #     def __init__(self):
    #     def handle(self, image, points):

    # class AttributesExtractorHandler:
    #     def __init__(self):
    #     def infer(self, image):
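Before building an image, the handler can be exercised locally with stand-in objects. The sketch below is not the Nuclio SDK: `FakeLogger` and `fake_response` are hypothetical stubs that only mimic the attributes the handler touches (`context.logger.info` and `context.Response`).

```python
from types import SimpleNamespace


class FakeLogger:
    """Collects log messages instead of sending them to Nuclio."""

    def __init__(self):
        self.messages = []

    def info(self, message):
        self.messages.append(message)


def fake_response(body=None, headers=None, content_type="text/plain", status_code=200):
    """Hypothetical stand-in for context.Response; returns a plain namespace."""
    return SimpleNamespace(body=body, headers=headers or {},
                           content_type=content_type, status_code=status_code)


def handler(context, event):
    # Same body as the hello-world handler in main.py.
    context.logger.info('This is an unstructured log')
    return context.Response(
        body='hihi, from nuclio :]',
        headers={},
        content_type='text/plain',
        status_code=200,
    )


context = SimpleNamespace(logger=FakeLogger(), Response=fake_response,
                          user_data=SimpleNamespace())
event = SimpleNamespace(body=b'{"hihihi":"world"}')
response = handler(context, event)
print(response.status_code, response.body)
```

A check like this catches syntax and logic errors in seconds, whereas a failed `nuctl deploy` only reports them after the image build.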

Deploy functions

    (mmlab38) ~/my/git/mmdetection/cvat$
    # $ nuctl deploy hiworld \
    $ nuctl deploy \
        --path serverless/sixx/hiworld/nuclio \
        --volume `pwd`/serverless/common:/opt/nuclio/common \
        --platform local

23.02.16 13:44:32.874 nuctl (I) Deploying function {"name": "hiworld"}

23.02.16 13:44:32.874 nuctl (I) Building {"builderKind": "docker", "versionInfo": "Label: 1.8.14, Git commit: cbb0774230996a3eb4621c1a2079e2317578005b, OS: linux, Arch: amd64, Go version: go1.17.8", "name": "hiworld"}

23.02.16 13:44:33.090 nuctl (I) Staging files and preparing base images

23.02.16 13:44:33.091 nuctl (I) Building processor image {"registryURL": "", "imageName": "nuclio/processor-hiworld:latest"}

23.02.16 13:44:33.091 nuctl.platform.docker (I) Pulling image {"imageName": "quay.io/nuclio/handler-builder-python-onbuild:1.8.14-amd64"}

23.02.16 13:44:36.511 nuctl.platform.docker (I) Pulling image {"imageName": "quay.io/nuclio/uhttpc:0.0.1-amd64"}

23.02.16 13:44:40.783 nuctl.platform (I) Building docker image {"image": "nuclio/processor-hiworld:latest"}

23.02.16 13:45:33.889 nuctl.platform (I) Pushing docker image into registry {"image": "nuclio/processor-hiworld:latest", "registry": ""}

23.02.16 13:45:33.889 nuctl.platform (I) Docker image was successfully built and pushed into docker registry {"image": "nuclio/processor-hiworld:latest"}

23.02.16 13:45:33.889 nuctl (I) Build complete {"result": {"Image":"nuclio/processor-hiworld:latest","UpdatedFunctionConfig":{"metadata":{"name":"hiworld","namespace":"nuclio","labels":{"nuclio.io/project-name":"default"}},"spec":{"description":"For Test Showcases unstructured logging and a structured response.","handler":"hiworld:handler","runtime":"python:3.7","resources":{"requests":{"cpu":"25m","memory":"1Mi"}},"image":"nuclio/processor-hiworld:latest","minReplicas":1,"maxReplicas":1,"targetCPU":75,"triggers":{"default-http":{"class":"","kind":"http","name":"default-http","maxWorkers":1}},"volumes":[{"volume":{"name":"volume-1","hostPath":{"path":"/raid/templates/cvat/serverless/common"}},"volumeMount":{"name":"volume-1","mountPath":"/opt/nuclio/common"}}],"build":{"codeEntryType":"image"},"platform":{},"readinessTimeoutSeconds":120,"securityContext":{},"eventTimeout":""}}}}

23.02.16 13:45:33.896 nuctl (I) Cleaning up before deployment {"functionName": "hiworld"}

23.02.16 13:45:34.728 nuctl.platform (I) Waiting for function to be ready {"timeout": 120}

23.02.16 13:45:36.755 nuctl (I) Function deploy complete {"functionName": "hiworld", "httpPort": 49159, "internalInvocationURLs": ["172.17.0.9:8080"], "externalInvocationURLs": []}

$ nuctl get functions

NAMESPACE | NAME | PROJECT | STATE | REPLICAS | NODE PORT

nuclio | hiworld | default | ready | 1/1 | 49158

nuclio | openvino-dextr | cvat | ready | 1/1 | 49153

nuclio | openvino-mask-rcnn-inception... | cvat | ready | 1/1 | 49156

nuclio | openvino-omz-public-yolo-v3-tf | cvat | ready | 1/1 | 49155

nuclio | pth-foolwood-siammask | cvat | ready | 1/1 | 49157

nuclio | tf-matterport-mask-rcnn | cvat | error | 1/1 |

    $ nuctl invoke hiworld \
        --method POST \
        --body '{"hihihi":"world"}' \
        --content-type "application/json"

23.02.16 11:08:15.114 nuctl.platform.invoker (I) Executing function {"method": "POST", "url": "http://:49158", "bodyLength": 17, "headers": {"Content-Type":["application/json"],"X-Nuclio-Log-Level":["info"],"X-Nuclio-Target":["helloworld"]}}

23.02.16 11:08:15.115 nuctl.platform.invoker (I) Got response {"status": "200 OK"}

23.02.16 11:08:15.115 nuctl (I) >>> Start of function logs

23.02.16 11:08:15.115 helloworld (I) This is an unstrucured log {"time": 1676513295115.0264}

23.02.16 11:08:15.115 nuctl (I) <<< End of function logs

> Response headers:

Server = nuclio

Date = Thu, 16 Feb 2023 02:08:14 GMT

Content-Type = application/text

Content-Length = 21

> Response body:

Hello, from Nuclio :]
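The same call can be made over plain HTTP against the node port. The sketch below only builds the request. The host `localhost` is an assumption, since the log shows an empty host in `http://:49158`, and `build_invoke_request` is a hypothetical helper name.

```python
import json
import urllib.request


def build_invoke_request(port, payload, host="localhost"):
    """Build (but do not send) a POST request for a locally deployed function."""
    data = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url=f"http://{host}:{port}",
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_invoke_request(49158, {"hihihi": "world"})
print(request.get_method(), request.full_url, len(request.data))

# Sending requires the function to be running:
# with urllib.request.urlopen(request, timeout=10) as resp:
#     print(resp.status, resp.read().decode())
```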

    metadata:
      name: hiworld
      labels:
        nuclio.io/project-name: default
    spec:
      description: "For Test Showcases unstructured logging and a structured response."
      handler: "hiworld:handler"
      runtime: "python:3.7"
      resources:
        requests:
          cpu: 25m
          memory: 1Mi
      image: "nuclio/processor-hiworld:latest"
      minReplicas: 1
      maxReplicas: 1
      targetCPU: 75
      triggers:
        default-http:
          class: ""
          kind: http
          name: default-http
          maxWorkers: 1
      volumes:
        - volume:
            name: volume-1
            hostPath:
              path: /home/oschung_skcc/my/git/mmdetection/cvat/serverless/common
          volumeMount:
            name: volume-1
            mountPath: /opt/nuclio/common
      build:
        codeEntryType: image
        timestamp: 1676523194
      platform: {}
      readinessTimeoutSeconds: 120
      securityContext: {}
      eventTimeout: ""
      version: 1

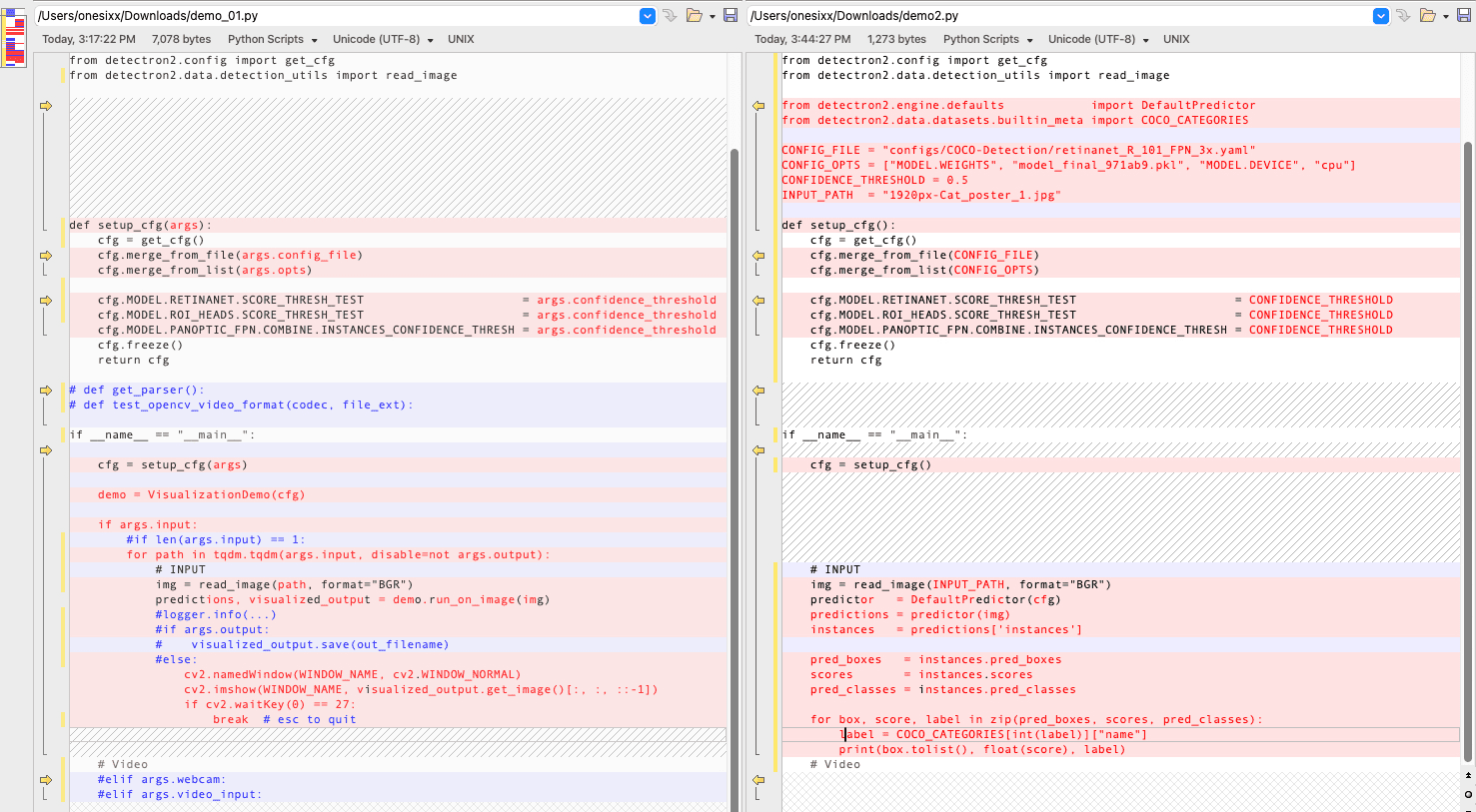

Deploying a custom model: detectron2

https://opencv.github.io/cvat/docs/manual/advanced/serverless-tutorial/#adding-your-own-dl-models

After installing detectron2, run the DL model locally

    $ python demo/demo.py \
        --config-file configs/COCO-Detection/retinanet_R_101_FPN_3x.yaml \
        --input 1920px-Cat_poster_1.jpg \
        --output out.jpg \
        --opts MODEL.WEIGHTS model_final_971ab9.pkl MODEL.DEVICE cpu

    from detectron2.config import get_cfg
    from detectron2.data.detection_utils import read_image
    from detectron2.engine.defaults import DefaultPredictor
    from detectron2.data.datasets.builtin_meta import COCO_CATEGORIES

    CONFIG_FILE = "configs/COCO-Detection/retinanet_R_101_FPN_3x.yaml"
    CONFIG_OPTS = ["MODEL.WEIGHTS", "model_final_971ab9.pkl", "MODEL.DEVICE", "cpu"]
    CONFIDENCE_THRESHOLD = 0.5
    INPUT_PATH = "1920px-Cat_poster_1.jpg"

    def setup_cfg():
        cfg = get_cfg()
        cfg.merge_from_file(CONFIG_FILE)
        cfg.merge_from_list(CONFIG_OPTS)
        cfg.MODEL.RETINANET.SCORE_THRESH_TEST = CONFIDENCE_THRESHOLD
        cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = CONFIDENCE_THRESHOLD
        cfg.MODEL.PANOPTIC_FPN.COMBINE.INSTANCES_CONFIDENCE_THRESH = CONFIDENCE_THRESHOLD
        cfg.freeze()
        return cfg

    if __name__ == "__main__":
        cfg = setup_cfg()

        # INPUT
        img = read_image(INPUT_PATH, format="BGR")

        predictor = DefaultPredictor(cfg)
        predictions = predictor(img)
        instances = predictions['instances']
        pred_boxes = instances.pred_boxes
        scores = instances.scores
        pred_classes = instances.pred_classes

        for box, score, label in zip(pred_boxes, scores, pred_classes):
            label = COCO_CATEGORIES[int(label)]["name"]
            print(box.tolist(), float(score), label)

        # Video

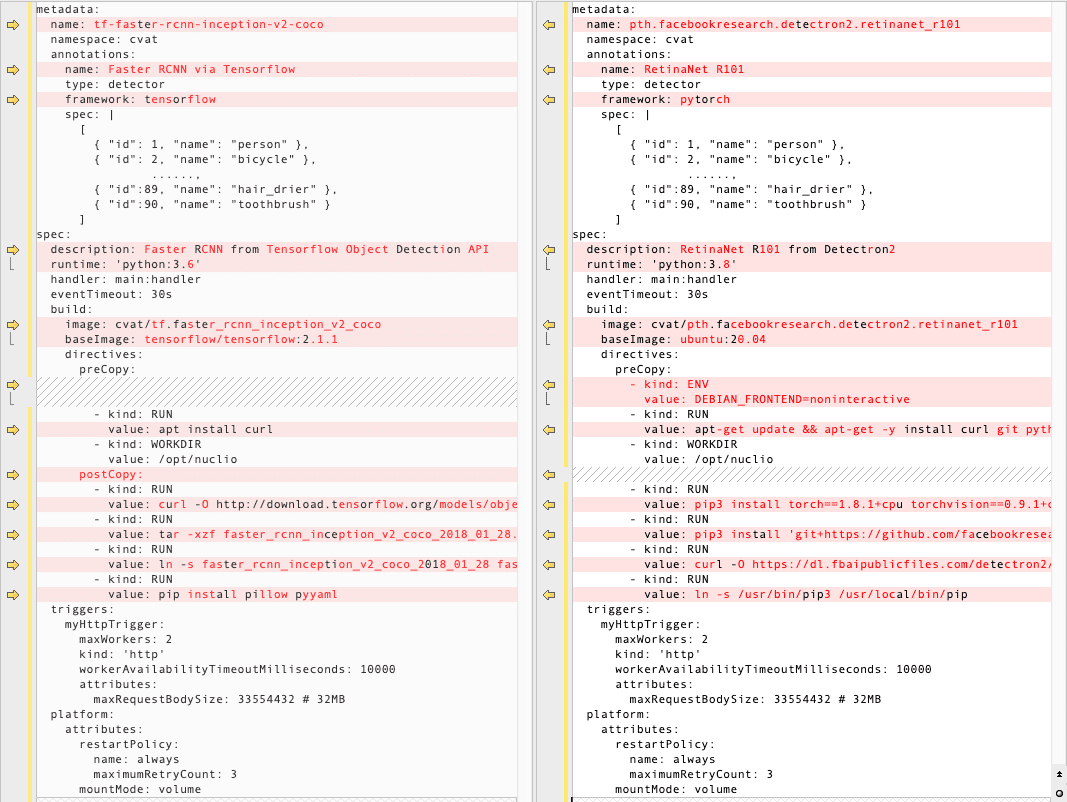

function.yaml

1) Prepare the serverless function that CVAT will use for annotation

Choose the function name

Full parameter reference: https://nuclio.io/docs/latest/reference/function-configuration/function-configuration-reference/

metadata.name: pth.facebookresearch.detectron2.retinanet_r101

metadata.annotations.name: the display name

metadata.annotations.type: the kind of serverless function, here detector

metadata.annotations.framework: informational only; there is no fixed set of values (for example OpenVINO, PyTorch, TensorFlow).

    metadata:
      name: pth.facebookresearch.detectron2.retinanet_r101
      namespace: cvat
      annotations:
        name: RetinaNet R101
        type: detector
        framework: pytorch
        spec: |
          [
            { "id": 1, "name": "person" },
            { "id": 2, "name": "bicycle" },
            ...
            { "id":89, "name": "hair_drier" },
            { "id":90, "name": "toothbrush" }
          ]

    spec:
      description: RetinaNet R101 from Detectron2
      runtime: 'python:3.8'
      handler: main:handler
      eventTimeout: 30s

      build:
        image: cvat/pth.facebookresearch.detectron2.retinanet_r101
        baseImage: ubuntu:20.04
        directives:
          preCopy:
            - kind: ENV
              value: DEBIAN_FRONTEND=noninteractive
            - kind: RUN
              value: apt-get update && apt-get -y install curl git python3 python3-pip
            - kind: WORKDIR
              value: /opt/nuclio
            - kind: RUN
              value: pip3 install torch==1.8.1+cpu torchvision==0.9.1+cpu torchaudio==0.8.1 -f https://download.pytorch.org/whl/torch_stable.html
            - kind: RUN
              value: pip3 install 'git+https://github.com/facebookresearch/detectron2@v0.4'
            - kind: RUN
              value: curl -O https://dl.fbaipublicfiles.com/detectron2/COCO-Detection/retinanet_R_101_FPN_3x/190397697/model_final_971ab9.pkl
            - kind: RUN
              value: ln -s /usr/bin/pip3 /usr/local/bin/pip

      triggers:
        myHttpTrigger:
          maxWorkers: 2
          kind: 'http'
          workerAvailabilityTimeoutMilliseconds: 10000
          attributes:
            maxRequestBodySize: 33554432 # 32MB

      platform:
        attributes:
          restartPolicy:
            name: always
            maximumRetryCount: 3
          mountMode: volume
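Note that metadata.annotations.spec is a JSON document stored as a YAML string, so it has to be decoded separately after the YAML is loaded. A minimal sketch of that decoding follows, using a shortened label list. `LABELS_SPEC` and `load_labels` are illustrative names; the real value lists every label.

```python
import json

# Shortened copy of metadata.annotations.spec; the real value lists all labels.
LABELS_SPEC = """
[
  { "id": 1, "name": "person" },
  { "id": 2, "name": "bicycle" },
  { "id": 89, "name": "hair_drier" },
  { "id": 90, "name": "toothbrush" }
]
"""


def load_labels(spec_text):
    """Turn the JSON label list into an {id: name} dictionary."""
    return {item["id"]: item["name"] for item in json.loads(spec_text)}


labels = load_labels(LABELS_SPEC)
print(labels[1], labels[90])
```

Keying by id rather than by list position matters because the ids are not contiguous: the full list in the deploy log earlier jumps from 11 to 13, for example.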

Settings for reaching the model from outside

Specify the port number of the nuclio function and the cvat network.

Add a specific port to spec.triggers.myHttpTrigger.attributes, and add the Docker network created by docker compose (cvat_cvat) to spec.platform.attributes.

    triggers:
      myHttpTrigger:
        maxWorkers: 2
        kind: 'http'
        workerAvailabilityTimeoutMilliseconds: 10000
        attributes:
          maxRequestBodySize: 33554432
          port: 33000 # added port

    platform:
      attributes:
        restartPolicy:
          name: always
          maximumRetryCount: 3
        mountMode: volume
        network: cvat_cvat # added Docker network

2. Adapt the source code that runs the DL model locally to the Nuclio platform

2-1 Load the model into memory (using the init_context(context) function)

    def handler(context, event):
        # Objects stored by init_context are available here, e.g.:
        # context.user_data.my_db_connection.query(...)
        pass

    def init_context(context):
        # Create the DB connection under "context.user_data"
        # setattr(context.user_data, 'my_db_connection', my_db.create_connection())

        cfg = get_config('COCO-Detection/retinanet_R_101_FPN_3x.yaml')
        cfg.merge_from_list(CONFIG_OPTS)
        cfg.MODEL.RETINANET.SCORE_THRESH_TEST = CONFIDENCE_THRESHOLD
        cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = CONFIDENCE_THRESHOLD
        cfg.MODEL.PANOPTIC_FPN.COMBINE.INSTANCES_CONFIDENCE_THRESH = CONFIDENCE_THRESHOLD
        cfg.freeze()
        predictor = DefaultPredictor(cfg)

        context.user_data.model_handler = predictor
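The point of init_context is that the expensive model load happens once and every later request reuses the cached object. The sketch below shows that pattern without detectron2. `load_model` is a hypothetical stand-in for building `DefaultPredictor(cfg)`, and the context and event are plain namespaces rather than Nuclio objects.

```python
from types import SimpleNamespace

load_count = 0


def load_model():
    """Hypothetical stand-in for building DefaultPredictor(cfg)."""
    global load_count
    load_count += 1
    return lambda image: {"instances": [], "input": image}


def init_context(context):
    # Expensive work happens once and is cached on context.user_data.
    context.user_data.model_handler = load_model()


def handler(context, event):
    # Every request reuses the cached model instead of reloading it.
    return context.user_data.model_handler(event.body)


context = SimpleNamespace(user_data=SimpleNamespace())
init_context(context)
for body in ("img-1", "img-2", "img-3"):
    handler(context, SimpleNamespace(body=body))
print(load_count)
```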

2-2 Define the entry point in handler and put it in main.py so that the function can:

- accept incoming HTTP requests

- run inference

- reply with detection results

    import json
    import base64
    import io
    from PIL import Image
    import yaml

    # get_config resolves paths relative to detectron2's bundled model zoo configs
    from detectron2.model_zoo import get_config
    from detectron2.data.detection_utils import convert_PIL_to_numpy
    from detectron2.engine.defaults import DefaultPredictor
    from detectron2.data.datasets.builtin_meta import COCO_CATEGORIES

    CONFIG_FILE = "COCO-Detection/retinanet_R_101_FPN_3x.yaml"
    CONFIG_OPTS = ["MODEL.WEIGHTS", "model_final_971ab9.pkl", "MODEL.DEVICE", "cpu"]
    CONFIDENCE_THRESHOLD = 0.5

    def handler(context, event):
        context.logger.info("Run retinanet-R101 model")

        # The request body carries a base64-encoded image and an optional threshold
        data = event.body
        buf = io.BytesIO(base64.b64decode(data["image"]))
        threshold = float(data.get("threshold", CONFIDENCE_THRESHOLD))
        image = convert_PIL_to_numpy(Image.open(buf), format="BGR")

        predictions = context.user_data.model_handler(image)
        instances = predictions['instances']
        pred_boxes = instances.pred_boxes
        scores = instances.scores
        pred_classes = instances.pred_classes

        results = []
        for box, score, label in zip(pred_boxes, scores, pred_classes):
            label = COCO_CATEGORIES[int(label)]["name"]
            if score >= threshold:
                results.append({
                    "confidence": str(float(score)),
                    "label": label,
                    "points": box.tolist(),
                    "type": "rectangle",
                })

        return context.Response(
            body=json.dumps(results),
            headers={},
            content_type='application/json',
            status_code=200)

    def init_context(context):
        context.logger.info("Init context...  0%")

        cfg = get_config(CONFIG_FILE)
        cfg.merge_from_list(CONFIG_OPTS)
        cfg.MODEL.RETINANET.SCORE_THRESH_TEST = CONFIDENCE_THRESHOLD
        cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = CONFIDENCE_THRESHOLD
        cfg.MODEL.PANOPTIC_FPN.COMBINE.INSTANCES_CONFIDENCE_THRESH = CONFIDENCE_THRESHOLD
        cfg.freeze()
        predictor = DefaultPredictor(cfg)

        # Cache the predictor so handler() can reuse it on every request
        setattr(context.user_data, 'model_handler', predictor)

        # Read the label list from the deployed function.yaml
        with open("/opt/nuclio/function.yaml") as f:
            functionconfig = yaml.safe_load(f)
        labels_spec = functionconfig['metadata']['annotations']['spec']
        labels = {item['id']: item['name'] for item in json.loads(labels_spec)}
        setattr(context.user_data, "labels", labels)

        context.logger.info("Init context...100%")
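The request and response shapes used by this handler can be tried out without detectron2 or a running function. The sketch below mirrors the base64 decoding and the threshold filter. The helper names (`build_payload`, `decode_payload`, `to_cvat_shapes`) and the sample detections are made up for illustration.

```python
import base64
import json


def build_payload(image_bytes, threshold=0.5):
    """Client side: wrap raw image bytes in the JSON body the handler reads."""
    return json.dumps({
        "image": base64.b64encode(image_bytes).decode("ascii"),
        "threshold": threshold,
    })


def decode_payload(body):
    """Server side: mirror of the first lines of handler()."""
    data = json.loads(body)
    return base64.b64decode(data["image"]), float(data.get("threshold", 0.5))


def to_cvat_shapes(detections, threshold):
    """Keep detections at or above the threshold, formatted like the handler's results."""
    return [
        {"confidence": str(score), "label": label, "points": box, "type": "rectangle"}
        for box, score, label in detections
        if score >= threshold
    ]


fake_image = b"\x89PNG placeholder bytes"  # not a real image
image, threshold = decode_payload(build_payload(fake_image, threshold=0.6))
detections = [([10.0, 20.0, 110.0, 220.0], 0.91, "cat"),
              ([5.0, 5.0, 50.0, 50.0], 0.40, "dog")]
print(to_cvat_shapes(detections, threshold))
```

Each box is given as [x1, y1, x2, y2], matching what `box.tolist()` yields for a detectron2 box.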

3. Deploy

To use the new serverless function, deploy it with the nuctl command,

as was done for the built-in model above.

- function.yaml

- main.py

- model_handler.py

Method 1)

    $ nuctl deploy --project-name cvat \
        --path serverless/pytorch/facebookresearch/detectron2/retinanet/ \
        --volume `pwd`/serverless/common:/opt/nuclio/common \
        --platform local

Method 2)

    $ serverless/deploy_cpu.sh \
        serverless/pytorch/facebookresearch/detectron2/retinanet/

Issue

https://github.com/opencv/cvat/issues/3457 : Steps for custom model deployment

How to upload DL which built by myself?

https://github.com/opencv/cvat/issues/5551 : Mmdetection MaskRCNN serverless support for semi-automatic annotation

https://github.com/opencv/cvat/issues/4909: Load my own Yolov5 model on cvat by nuclio

mmdetection model conversion

https://mmdetection.readthedocs.io/en/latest/useful_tools.html#model-conversion

Exporting MMDetection models to ONNX format

https://medium.com/axinc-ai/exporting-mmdetection-models-to-onnx-format-3ec839c38ff

openvino : https://da2so.tistory.com/63

Serving pre-trained ML/DL models

https://docs.mlrun.org/en/stable/tutorial/03-model-serving.html#serving-pre-trained-ml-dl-models